In December 2025, Google announced “Titans,” a new architecture with the potential to put an end to the long-standing problem of AI's “long-term memory,” together with the theoretical framework “MIRAS.” This may be a big step beyond the limitations of the “Transformer” model, which forms the foundation of current AI technology, toward a future where AI understands context like humans do and keeps retaining huge amounts of information.

In this article, I will explain in an accessible but in-depth way why this technology is attracting attention: the issues behind it, the mechanisms that Titans and MIRAS use, and how they may affect our future.

1. Why is AI “forgetful”? Fundamental issues faced by the Transformer model

Many of today's large language models (LLMs) are based on an architecture called the “Transformer,” which Google announced in 2017. The Transformer introduced the “attention” mechanism for capturing the relationships between words in a sentence, and it dramatically improved the accuracy of natural language processing.

However, this powerful model also has a weakness: as the amount of information to be processed (the context length) increases, the computational cost explodes. Specifically, because the amount of computation grows in proportion to the square of the context length, the model is poorly suited to reading long documents spanning tens of thousands of pages or to processing huge amounts of data, as in genome analysis. This “forgetfulness” has been a major barrier to AI acquiring truly human-like thinking.
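To make the quadratic growth concrete, here is a minimal sketch that counts the token-to-token scores one full self-attention pass has to compute. The function name `attention_score_count` is my own, and the count ignores real-world details such as multiple heads, layers, and optimized kernels.

```python
def attention_score_count(context_length: int) -> int:
    """Pairwise token-to-token scores in one full self-attention pass (single head)."""
    return context_length * context_length


# Each 10x increase in context length costs 100x more scores.
for n in (1_000, 10_000, 100_000):
    print(f"{n:>7,} tokens -> {attention_score_count(n):>18,} scores")
```

Doubling the context length quadruples the work, which is why very long inputs quickly become impractical.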

To solve this problem, researchers have explored various approaches, such as recurrent neural networks (RNNs) and state-space models (SSMs) like Mamba, but all of them face the same dilemma: important information is lost in the process of compressing the context to a fixed size.
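The fixed-size dilemma can be illustrated with a deliberately simplified toy. This is not an actual RNN or Mamba. The function `run_leaky_state` and its `decay` parameter are assumptions of mine: the whole "context" is squeezed into one number, and each new input overwrites a little of what was there before.

```python
def run_leaky_state(inputs, decay=0.9):
    """Compress a whole sequence into a single number (a fixed-size state)."""
    state = 0.0
    for x in inputs:
        # Keep a fraction of the old state and blend in the new input.
        state = decay * state + (1.0 - decay) * x
    return state


# An important signal at the very start, followed by 99 uninformative inputs.
early_signal = [1.0] + [0.0] * 99
print(run_leaky_state(early_signal))  # what is left of the first input
```

After 100 steps, almost nothing of the first input remains in the state, no matter how important it was.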

2. A new idea: memorizing “surprise.” Approaching the core of Google's “Titans”

That is where Google's new architecture, “Titans,” comes in. Titans takes a completely different approach from conventional models to give AI long-term memory capabilities. At its core are two groundbreaking ideas.

2-1. “Learn” memory with deep neural networks

Whereas conventional RNNs hold information in fixed-size vectors and matrices, Titans uses a deep neural network (a multi-layer perceptron) itself as the long-term memory module. This makes it possible not only to record information, but also to “learn” important relationships and conceptual themes from it and to summarize large amounts of information efficiently without losing context. The AI is no longer just taking notes; it can understand and integrate the entire story.
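As a rough illustration of "a neural network as the memory," the sketch below stores key-to-value associations in the weights of a tiny one-hidden-layer perceptron and writes to it with gradient steps. The class `MLPMemory`, its sizes, and the learning rate are all assumptions of mine for illustration. This is not Google's implementation, and the real module is far larger and deeper.

```python
import math
import random


class MLPMemory:
    """Tiny one-hidden-layer MLP used as an associative memory (key -> value)."""

    def __init__(self, dim=2, hidden=8, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(dim)]

    def _hidden(self, key):
        return [math.tanh(sum(w * k for w, k in zip(row, key))) for row in self.w1]

    def read(self, key):
        h = self._hidden(key)
        return [sum(w * a for w, a in zip(row, h)) for row in self.w2]

    def write(self, key, value, lr=0.1):
        """One gradient step on 0.5 * ||read(key) - value||^2; returns the loss before the step."""
        h = self._hidden(key)
        out = [sum(w * a for w, a in zip(row, h)) for row in self.w2]
        err = [o - v for o, v in zip(out, value)]
        # Backpropagate the error into the hidden layer before changing w2.
        grad_h = [sum(err[i] * self.w2[i][j] for i in range(len(err))) for j in range(len(h))]
        for i, e in enumerate(err):
            for j, a in enumerate(h):
                self.w2[i][j] -= lr * e * a
        for j, a in enumerate(h):
            delta = grad_h[j] * (1.0 - a * a)  # derivative of tanh
            for k, x in enumerate(key):
                self.w1[j][k] -= lr * delta * x
        return 0.5 * sum(e * e for e in err)


memory = MLPMemory()
pairs = [([1.0, 0.0], [0.0, 1.0]), ([0.0, 1.0], [1.0, 0.0])]
for _ in range(200):
    for key, value in pairs:
        memory.write(key, value)
print(memory.read([1.0, 0.0]))  # should now be close to the stored value [0.0, 1.0]
```

The point of the design is that "remembering" becomes "training": what is stored lives in the network's weights rather than in a fixed-size vector.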

2-2. Use “surprise metrics” to select only important information

Another distinctive feature of Titans is a mechanism called the “surprise metric.” It is inspired by how human memory works: we quickly forget everyday events, but we vividly remember unexpected “surprises” and events that stir our emotions.

Titans imitates this mechanism: information with a high degree of “surprise,” meaning information that cannot be predicted from the input seen so far, is preferentially stored in long-term memory. For example, if an image of a banana suddenly appears while the model is reading a financial report, it is stored as “highly surprising” information. As a result, the AI selects only what is really important from a huge amount of information and builds its memory efficiently.
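One simple way to turn "cannot be predicted" into a number is to measure how large a correction the memory needs for a new input. The sketch below does this with a small linear memory and uses the gradient norm as the surprise score. This is my simplified assumption for illustration, not the exact Titans update rule, and the function `write_with_surprise` and the toy `routine` and `banana` inputs are hypothetical.

```python
import math


def write_with_surprise(memory, key, value, lr=0.5):
    """Take one gradient step on 0.5 * ||memory @ key - value||^2 and report the surprise."""
    pred = [sum(m * k for m, k in zip(row, key)) for row in memory]
    err = [p - v for p, v in zip(pred, value)]
    grad = [[e * k for k in key] for e in err]  # outer product of error and key
    surprise = math.sqrt(sum(g * g for row in grad for g in row))
    # The write is proportional to the gradient, so surprising inputs change memory the most.
    new_memory = [[m - lr * g for m, g in zip(m_row, g_row)]
                  for m_row, g_row in zip(memory, grad)]
    return new_memory, surprise


memory = [[0.0, 0.0], [0.0, 0.0]]
routine = ([1.0, 0.0], [0.0, 1.0])  # stands in for ordinary financial-report content
banana = ([0.0, 1.0], [1.0, 0.0])   # stands in for the unexpected banana image
surprises = []
for key, value in [routine] * 4 + [banana]:
    memory, s = write_with_surprise(memory, key, value)
    surprises.append(s)
print(surprises)  # [1.0, 0.5, 0.25, 0.125, 1.0]
```

Repeated, predictable input becomes less and less surprising, while the unexpected input jumps back to a high score and triggers a large write.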

3. Integrate all AI models? Theoretical framework “MIRAS”

If Titans is a specific “tool,” then “MIRAS” is the “blueprint” that supports it. MIRAS is a unified theoretical framework that reinterprets the various approaches to sequence modeling through a single common mechanism called “associative memory.”

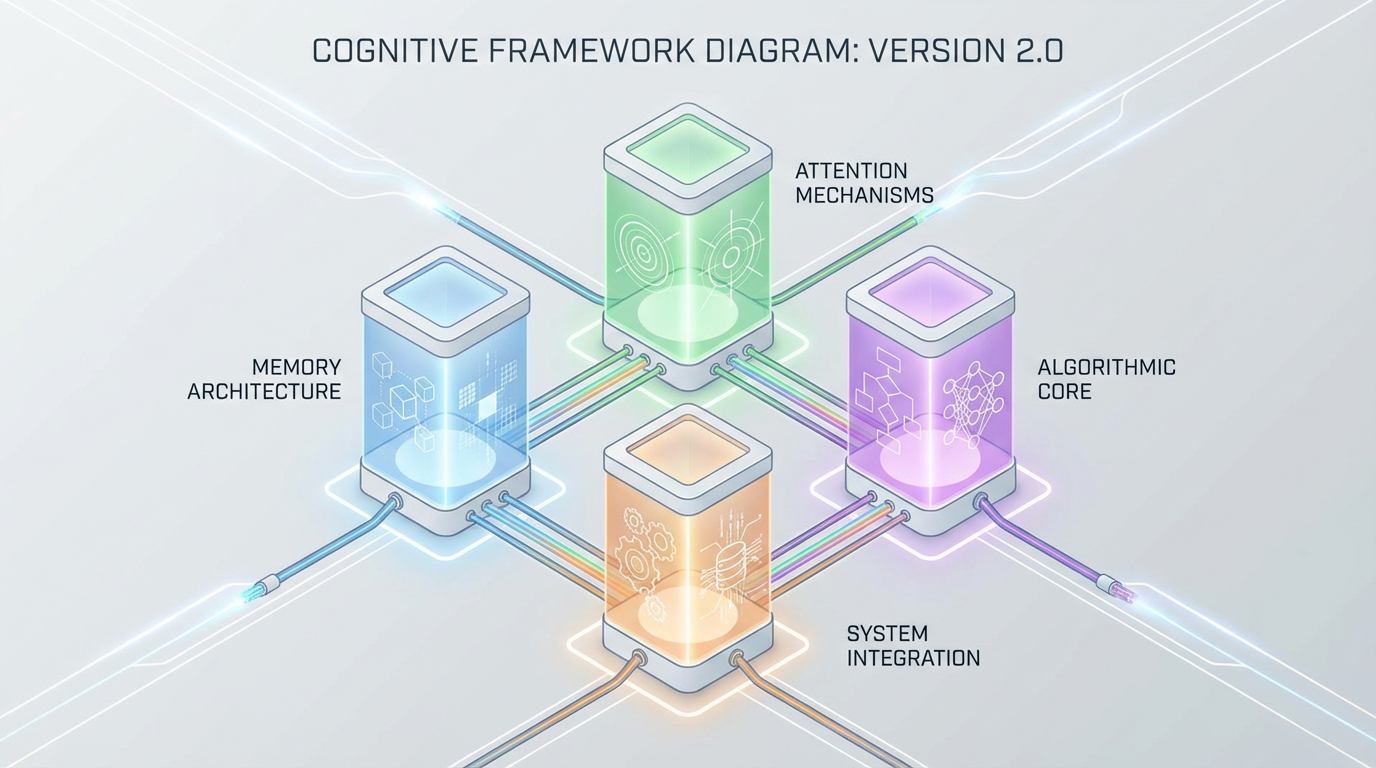

MIRAS holds that any sequence model can be defined through the following four main design elements.

| Design element | Explanation |
| --- | --- |
| Memory architecture | Structure that stores information (e.g. vectors, matrices, neural networks) |
| Attentional bias | The internal objective that determines what the model prioritizes learning |
| Retention gate | The “forgetting” mechanism that balances new learning against past knowledge |
| Memory algorithm | Optimization algorithm for updating memory |
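To show how the four design elements fit together, here is a minimal sketch in which each element is one field of a small class. The class `AssociativeMemory`, its field names, and the default values are my own illustrative assumptions, not an API from Google.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AssociativeMemory:
    weights: List[float]                 # 1. memory architecture: here a plain vector
    loss_grad: Callable[[float], float]  # 2. attentional bias: gradient of the objective w.r.t. the error
    retention: float = 0.95              # 3. retention gate: fraction of old memory kept each step
    step_size: float = 0.5               # 4. memory algorithm: plain gradient descent with this step size

    def read(self, key: List[float]) -> float:
        return sum(w * k for w, k in zip(self.weights, key))

    def update(self, key: List[float], value: float) -> None:
        error = self.read(key) - value
        g = self.loss_grad(error)
        # Decay the old memory (retention), then take a gradient step toward the new pair.
        self.weights = [self.retention * w - self.step_size * g * k
                        for w, k in zip(self.weights, key)]


# A squared-error objective: the gradient w.r.t. the error is the error itself.
memory = AssociativeMemory(weights=[0.0, 0.0], loss_grad=lambda error: error)
for _ in range(20):
    memory.update([1.0, 0.0], 2.0)
print(memory.read([1.0, 0.0]))
```

Because the retention gate keeps pulling the memory back toward zero, the recalled value settles slightly below the target of 2.0. Swapping any one field (a different objective, a stronger gate, a different optimizer) gives a different model within the same frame.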

The strength of this framework is that it enables more diverse and robust model designs by breaking away from reliance on a single objective such as mean squared error (MSE), which traditional models have depended on. In fact, Google has already used MIRAS to create new models with different characteristics, such as “YAAD,” which is resistant to outliers, “MONETA,” which has more stable long-term memory, and “MEMORA,” which aims for the best memory stability.
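Why would moving away from MSE help with outliers? The sketch below compares the update signal from a squared-error objective with that of the Huber loss, a standard robust alternative. The Huber loss is my own illustrative choice of a non-MSE objective, and both function names are hypothetical.

```python
def squared_error_grad(error: float) -> float:
    """Gradient of 0.5 * error**2: grows without bound as the error grows."""
    return error


def huber_grad(error: float, delta: float = 1.0) -> float:
    """Gradient of the Huber loss: equal to the error near zero, clipped to +/- delta beyond it."""
    return max(-delta, min(delta, error))


# A normal error (0.5) and an outlier (100.0).
for error in (0.5, 100.0):
    print(error, squared_error_grad(error), huber_grad(error))
```

For small errors the two objectives behave the same, but with squared error a single outlier produces a huge update that can overwrite the memory, while the clipped gradient limits how much any one input can change it.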

4. Has it surpassed the Transformer? The remarkable performance of Titans

So how well does Titans actually perform? The Google research team ran rigorous tests comparing models born from Titans and MIRAS with existing major architectures such as Transformer++ and Mamba-2.

The results were striking. In particular, on the “BABILong” benchmark, which measures the ability to read long texts, Titans outperformed even GPT-4, a much larger model. It also demonstrated the ability to handle extremely long contexts of more than 2 million tokens, which was previously unthinkable.

These results suggest that Titans can be applied not only to text processing, but also to a wider range of fields such as genome modeling and time series prediction.

5. Summary: The future brought about by AI's “memory revolution”

“Titans” and “MIRAS,” announced by Google, present a very promising solution to the fundamental problem of “long-term memory” in AI. The new approach of learning from “surprises” and dynamically building memory with neural networks could greatly advance a future where AI understands context deeply, like humans do, and keeps learning over time.

As this technology develops, AI will no longer just be an information processing tool, but a true partner to assist our creativity and intellectual exploration. In a few years, the concept of what we call “AI” today may be completely overturned. The AI “memory revolution” has just begun.

Bibliography

- Google. (2025, December 4). Titans + MIRAS: Helping AI have long-term memory. Google Research. https://research.google/blog/titans-miras-helping-ai-have-long-term-memory/

- Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N.,... & Polosukhin, I. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (pp. 5998-6008).

- Nihon Keizai Shimbun. (2025, July 23). “Long-term memory AI” drawn by Google. https://www.chowagiken.co.jp/blog/titans_longterm_memory_ai

- Note. (2025, October 27). Will it surpass Transformers? The horizon of next-generation LLM drawn by Mamba and state-space models. https://note.com/betaitohuman/n/n693953f1a70a

- GIGAZINE. (2025, December 8). Google develops architecture “Titans” and framework “MIRAS” to support AI's long-term memory. https://gigazine.net/news/20251208-titans-miras-help-ai-long-term-memory/