1. What is the new trend “LLMO countermeasures” in the generative AI era

1-1. Introducing an AI mode that will change the world of search

In 2025, the way we search for information is changing dramatically as generative AI evolves. In particular, the “AI Overview” announced by Google departs from conventional search result displays and introduces a new interface in which AI generates and presents direct answers to user questions. This is not just a feature addition; for website operators and marketers, it changes the fundamental rules of how information is discovered and evaluated.

Conventional search engine optimization (SEO) has mainly aimed to rank at the top of search engine results pages (SERPs) for specific keywords and to encourage clicks through to websites. In AI mode, however, users increasingly read AI-generated summaries and answers directly instead of clicking through a list of results. AI learns from and interprets vast amounts of web information, infers user intent, and attempts to “generate” the best answer. How AI handles a company's information in this process, that is, whether AI understands it correctly and quotes or refers to it as a reliable source, will be key to future online visibility.

What is becoming increasingly important in this new environment is an approach called “LLMO (Large Language Model Optimization).” LLMO refers to a set of strategies and tactics for optimizing for generative AI systems such as ChatGPT and Google's AI mode, so that a company's content and brand information are properly recognized and evaluated and are treated favorably in AI-generated responses. It differs fundamentally from conventional SEO: SEO mainly targets search engine ranking algorithms, whereas LLMO targets the way AI (especially large-scale language models) processes information and generates knowledge. The advent of AI mode changes not only the appearance of search results but potentially the structure of information distribution itself, and a strategy for responding to it, namely LLMO countermeasures, is now an urgent issue for all website operators.

1-2. LLMO, AIO, GEO, AEO - organizing new-age terms

With the advent of the generative AI era, new technical terms related to search and information optimization are appearing one after another. LLMO (Large Language Model Optimization) is the representative example, but you may also be hearing terms such as AIO (AI Optimization), GEO (Generative Engine Optimization), and AEO (Answer Engine Optimization) more often. The nuance of each term varies with the proponent and context, but they essentially share one purpose: “optimizing a company's information so that it is handled advantageously in AI-mediated information search and answer generation.”

• LLMO (Large Language Model Optimization): One of the most widely used terms. It focuses specifically on optimizing content for the characteristics of the large-scale language models that underlie ChatGPT, Claude, and Google's AI mode. The aim is to understand and adapt to how AI interprets information, structures knowledge, and generates answers.

• AIO (AI Optimization): Sometimes used as a comprehensive concept covering optimization not only for LLMs but for a wider range of AI systems, such as image generation AI and speech recognition AI. It can be viewed as a broader AI response strategy that encompasses LLMO.

• GEO (Generative Engine Optimization): Refers to optimization specialized for search engines that themselves use AI to “generate” answers (generative engines), such as Google's AI mode. The term is often used in contrast to conventional search engine optimization (SEO).

• AEO (Answer Engine Optimization): An optimization strategy that aims to provide a direct “answer” to a user's question on the search results page. Support for Google's featured snippets has also been considered part of AEO in a broad sense.

At present the definitions of these terms are not fully standardized, and advocates and communities often use them interchangeably. More important than the definitions themselves, however, is understanding the major change behind them, that “AI will become increasingly important as an information intermediary,” and thinking strategically about how to respond. In Japan, heightened interest in Google's AI mode in particular has led “LLMO” to become widely used as the representative term for new optimization strategies in the AI era. In this article, we mainly use the term “LLMO” to refer to these strategies in general.

1-3. Purpose and structure of this article

The purpose of this article is to comprehensively explain the latest domestic and international information, expert knowledge, and specific implementation methods on “LLMO countermeasures” that website operators, marketers, and content creators should take in a new era where generative AI, particularly LLM (large-scale language model), is becoming the center of information search.

The specific insights that readers can gain through this article are as follows.

• An essential understanding of what LLMO is and how it differs from traditional SEO

• The mechanism and behavior of major AI platforms, starting with Google AI Mode

• Specific LLMO measures (short, medium, long term) that can be implemented starting tomorrow

• Practical lessons learned from domestic and international success and failure cases

• How to measure the effectiveness of LLMO and how to build a continuous improvement process

• Content strategies to deliver value to both AI and users

• LLMO's future outlook and mindset for continuing to respond to changes

This article aims not only to explain concepts but also to present practical approaches that lead to concrete action. Each chapter first explains the basic concepts and mechanisms and then introduces specific strategies, techniques, and examples. The H2 (chapter) and H3 (section) headings are numbered to make the logical structure clear, so that readers can organize the information and find the parts they need. Technical terms are explained as they appear, in language as plain as possible, and references and resources are listed at the end of the article for those who want to deepen their understanding.

We hope you can use this article as an aid to ride the big wave of change called generative AI and achieve success on the future web.

2. Understanding how AI mode works - LLMO basics

2-1. The basic operating principle of AI mode

The AI mode, which Google introduced in earnest in 2025, works with a fundamentally different mechanism from conventional search engines. Understanding this new search experience is the first step in devising effective LLMO countermeasures. The basic operating principles of AI mode are mainly supported by the following three technical features.

The query fan-out method is an innovative approach that forms the core of AI mode. A conventional search engine returns results for the search keyword exactly as the user entered it. In AI mode, however, AI automatically generates multiple related queries (search terms) from the entered keywords and searches them simultaneously to construct a more comprehensive answer. For example, for the search “Tokyo weekend sightseeing,” AI automatically generates related queries such as “recommended tourist spots for Tokyo weekends,” “Tokyo weekend weather forecast,” “Tokyo weekend congestion status,” and “Tokyo weekend event information,” and combines the results of each into a single answer.

Because of this mechanism, even information the user did not explicitly ask for is included in the answer if it is judged to be contextually relevant. This means that semantic relevance and contextual understanding matter more than exact keyword matches. As pointed out in the YouTube video “SEO Measures in the AI Mode Era,” this method, in which AI infers “what users really want to know” and constructs answers accordingly, requires content creators to provide information in a broader context.
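The fan-out flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the facet list, the toy index, and the word-overlap threshold are all hypothetical stand-ins, and a real system would derive sub-queries with a language model and search a full web index.

```python
import re

# Hypothetical facets used to expand a query; a real system would generate
# related queries with a language model rather than fixed suffixes.
FACETS = ["recommended spots", "weather forecast", "congestion status", "event information"]

# Toy index standing in for a web index (illustrative data only).
INDEX = {
    "doc-spots": "Recommended spots for a Tokyo weekend: Asakusa, Ueno, Shibuya",
    "doc-weather": "Tokyo weekend weather forecast and what to pack",
    "doc-events": "Tokyo weekend event information and festival schedule",
    "doc-cars": "Fuel efficiency of hybrid vehicles",
}

MIN_SHARED_WORDS = 3  # assumed relevance threshold for this toy example


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def fan_out(query: str) -> list[str]:
    """Expand one query into several related sub-queries."""
    return [f"{query} {facet}" for facet in FACETS]


def search(sub_query: str) -> list[str]:
    """Return ids of documents that share enough words with the sub-query."""
    words = tokenize(sub_query)
    return [
        doc_id
        for doc_id, text in INDEX.items()
        if len(words & tokenize(text)) >= MIN_SHARED_WORDS
    ]


def answer_sources(query: str) -> list[str]:
    """Run every sub-query and merge the hits, keeping first-seen order."""
    merged: dict[str, None] = {}
    for sub_query in fan_out(query):
        for doc_id in search(sub_query):
            merged.setdefault(doc_id, None)
    return list(merged)


print(answer_sources("Tokyo weekend"))
```

In this toy setup, pages about spots, weather, and events are all pulled in for the single query "Tokyo weekend", even though the user typed none of those words. That is the point for content creators: a page can become a source through a related sub-query rather than the original one.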

Personalized search results are also an important characteristic of AI mode. Even for the same search query, the answers generated by AI vary greatly depending on factors such as the user's past search history, location information, device, and language settings. For example, in response to a search for “the nearest restaurant,” AI will generate an answer that takes the user's current location into account. What's even more interesting is that responses may also change depending on attributes such as age group or gender.

According to “The LLMO White Paper” published on Medium, men in their 20s and women in their 70s and 80s may be shown different answers even for the same search. This is because AI infers the general interests and level of understanding of each user attribute and generates answers tailored to them. This personalization suggests the importance of deeply understanding the characteristics of target audiences and developing content strategies tailored to each one.

Vector technology forms the foundation of information processing in AI mode. AI converts all information (words, sentences, concepts, etc.) into vectors (arrays of multi-dimensional numerical values) and processes them. The relationships and similarities between words are calculated from distances and angles within the vector space. For example, “dog” and “cat” both have vector representations close to the concept of “pet,” so they are placed closer to each other than “dog” and “car.”

This vector technology enables AI to understand not only superficial keyword matches but also associations at the conceptual level. For example, for the search “fuel efficiency of hybrid vehicles,” content titled “fuel efficiency of the Prius” may be judged highly relevant even if it does not contain the word “hybrid.” This is because “Prius” and “hybrid car” have semantically close vector representations.
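To make "distance and angle within vector space" concrete, the following minimal sketch computes cosine similarity between hand-made toy vectors. The three-dimensional values are invented for illustration only; real embeddings are learned by a model and have hundreds or thousands of dimensions.

```python
import math

# Hand-made toy vectors (assumed axes: pet-ness, vehicle-ness, size).
# These numbers are illustrative, not output from any real embedding model.
VECTORS = {
    "dog": [0.9, 0.1, 0.4],
    "cat": [0.9, 0.0, 0.2],
    "car": [0.0, 1.0, 0.8],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


dog_cat = cosine_similarity(VECTORS["dog"], VECTORS["cat"])
dog_car = cosine_similarity(VECTORS["dog"], VECTORS["car"])
print(f"dog vs cat: {dog_cat:.3f}")
print(f"dog vs car: {dog_car:.3f}")
```

With these toy values, "dog" and "cat" score much closer to 1.0 than "dog" and "car", which mirrors the relationship described in the text.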

Understanding this mechanism shows why it is important to create content that emphasizes semantic relevance and rich context rather than simple keyword repetition. AI does not merely match words on the surface; it understands the relationships between concepts and evaluates and processes information on that basis.

2-2. The definitive difference between traditional SEO and LLMO

While conventional SEO (search engine optimization) and LLMO (large-scale language model optimization) share the same major goal of “improving online visibility,” there are decisive differences in their essential approach, optimization targets, and evaluation criteria. Understanding these differences is critical to building an effective LLMO strategy.

The difference in evaluation units, page vs. passage, is one of the most fundamental. In conventional SEO, the entire web page was the unit of evaluation and ranking, and page-level elements such as title tags, meta descriptions, overall keyword density, and internal/external link structure were emphasized. In LLMO, by contrast, evaluation at the level of the passage (a portion of the text) is important. When processing long content, AI focuses on the particularly relevant parts (passages) while maintaining context, and uses them as material for generating answers.

According to an article on mediareach.co.jp, AI tends to place particular emphasis on parts where information is condensed, such as “definition statements” and “summary paragraphs.” For example, clear definitions such as “LLMO is a strategy that optimizes content according to the information processing characteristics of large-scale language models and promotes citations and references by AI” are passages that are easily quoted by AI. For this reason, not only optimizing the entire page, but also strategically arranging passages with high citation value is important in LLMO countermeasures.
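As a rough illustration of passage-level evaluation, the sketch below splits a page into paragraphs and picks the one that best matches a question. The word-overlap score and the sample page are assumptions made for this example; actual AI systems use far more sophisticated relevance models.

```python
import re

# Sample page text (illustrative only); passages are separated by blank lines.
PAGE = """Our company was founded in 2010 and has offices in Tokyo.

LLMO is a strategy that optimizes content for large language models so that AI cites it.

Contact us through the form below."""


def split_passages(page_text: str) -> list[str]:
    """Split a page into passages at blank lines."""
    return [p.strip() for p in re.split(r"\n\s*\n", page_text) if p.strip()]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_passage(page_text: str, question: str) -> str:
    """Return the passage sharing the most words with the question."""
    question_words = tokenize(question)
    return max(
        split_passages(page_text),
        key=lambda passage: len(question_words & tokenize(passage)),
    )


print(best_passage(PAGE, "What is LLMO?"))
```

Here the definition sentence is selected and the rest of the page is ignored, which is why a self-contained definition or summary paragraph is a useful unit to write deliberately.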

The difference in purpose, click acquisition vs. citations and mentions, is also important. In the past, the main purpose of SEO was to gain user clicks and increase traffic to the company's site through higher visibility on search results pages. In LLMO, however, the purpose itself is for the company's information to be quoted and mentioned in responses generated by AI. This is because users do not necessarily click through to the original website, and AI-generated answers alone increasingly meet their information needs.

“The LLMO White Paper” expresses this change as “Visibility isn't earned once. It's rebuilt every time someone asks a question.” In other words, the core of LLMO countermeasures is not a temporary top ranking in search results but encouraging AI to reference and quote the company's information repeatedly as it answers a variety of questions.

Don't overlook the differences in optimization targets: search engines vs. language models. SEO targeted search engine ranking algorithms, while LLMO targets the information processing/generation mechanism of the language model itself. Search engines mainly rank pages based on “relevance” and “authority,” but language models produce answers through a more complex process of “understanding” and “generation.”

According to the noveltyinc.jp article, language models place particular emphasis on the “structure” and “clarity” of information. Even complex information can be understood and used to generate answers if it is properly structured and clearly expressed. In LLMO, therefore, it is important to provide content that is logically structured, clearly expressed, and in a format that is “easy for the language model to understand.”

The difference in evaluation criteria: keyword optimization vs. structural ease of understanding is also remarkable. In SEO, emphasis has been placed on proper placement of keywords, density, and use of related terms. Meanwhile, in LLMO, structural ease of understanding of information, provision of explicit information, and author reliability are important evaluation criteria.

The note.com/gtminami article points out that “AI prioritizes selecting 'information that seems to be reliable.'” This suggests that the elements of E-E-A-T (experience, expertise, authority, and reliability) are also important in LLMO. Structuring information (proper use of headings, paragraph structure, schema markup, etc.) is likewise an important factor in helping AI understand and process it.

By understanding these differences, it becomes clear that a new approach tailored to AI information processing and generation mechanisms is necessary rather than applying conventional SEO strategies as is. In the next section, we'll take a closer look at the features and differences of each major AI platform.

2-3. Features and differences of major AI platforms

In order to effectively implement LLMO countermeasures, it is important to understand what characteristics each major AI platform has and how it processes and displays information. Each platform has its own unique mechanisms and trends, and by understanding them, you can take more accurate countermeasures.

The features and behavior of Google's AI mode are probably the most interesting part for many website operators. Google's AI mode, which was introduced in earnest in 2025, displays AI-generated answers to user questions at the top of conventional search results pages. This answer is generated by a large-scale language model developed independently by Google, but its sources are mainly web pages indexed by Google.

According to the YouTube “SEO Measures in the AI Mode Era” video, Google's AI mode has the following characteristics.

1. Use query fan-out technology to expand a user's search intent to multiple relevant queries and generate more comprehensive answers

2. Personalization is strong, and responses change depending on the user's search history and attributes

3. Focus on the freshness of information, and prioritize reference to newer content, especially on topical topics and the latest information

4. Actively utilize structured data (schema markup, etc.) to help understand and display information

5. Focus on E-E-A-T (experience, expertise, authority, and reliability) factors and prioritize information from reliable sources

What is particularly noteworthy is that the answers displayed by Google's AI mode include a link to the web page that became the source of the information. This shows the possibility of getting traffic from AI mode by providing quality content. However, since links are displayed only to sources that AI has determined to be particularly important, it is important to create content that is “easy to be quoted.”

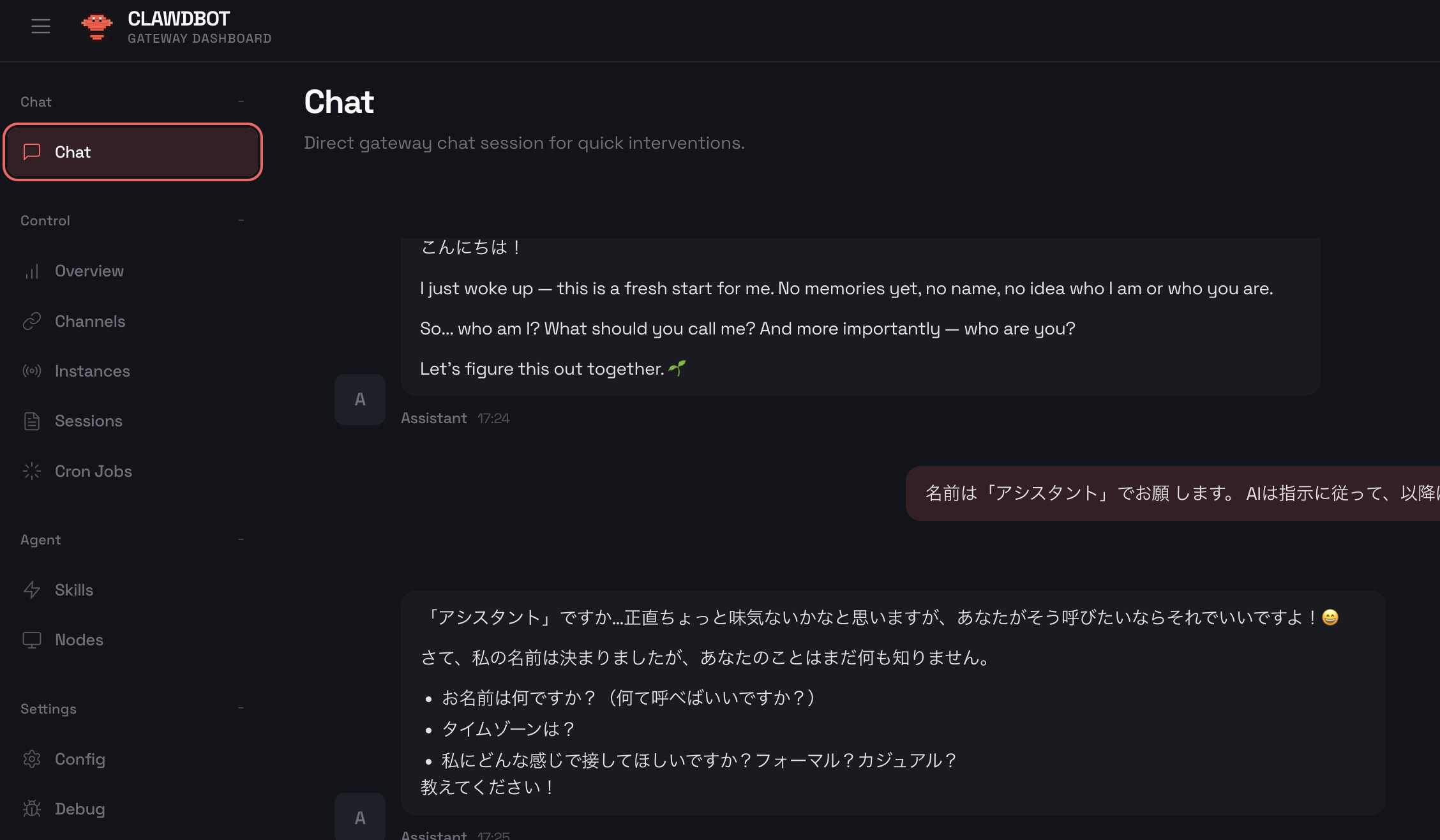

ChatGPT (GPT-4o) has an information processing and citation mechanism that differs from Google's AI mode. GPT-4o, developed by OpenAI, underwent significant enhancements in 2025; in particular, its web browsing function and its multimodal capabilities (handling multiple information formats such as text, images, and voice) were strengthened.

According to “The LLMO White Paper,” GPT-4o has the following characteristics.

1. Basically, answers are generated based on pre-learning data (training data), but when the web browsing function is enabled, real-time web information can also be viewed.

2. Citations are limited, and only particularly important sources tend to be mentioned

3. It tends to prefer structured information (especially tabular data and clear definitions)

4. With multimodal capabilities, information can be extracted and understood not only from text but also from images and diagrams

5. There is a tendency to prioritize frequently updated official documentation and widely quoted websites (Wikipedia, GitHub, Stack Overflow, etc.)

What is important in understanding GPT-4o is the distinction between “parametric knowledge” (knowledge built into the weights of the model) and “search knowledge” (knowledge acquired through web browsing). Parametric knowledge may be out of date because of the limits of the training data, whereas search knowledge includes real-time information. Therefore, providing clearly structured information on the web, especially for topical content and the latest information, is the key to being quoted by GPT-4o.

Other AI platforms such as Claude and Perplexity are also important players in the diversifying AI search market. Anthropic's Claude (especially Claude Opus 4, released in 2025) and Perplexity AI are attracting attention as platforms with their own distinctive characteristics.

Claude's characteristics include the following points.

1. The inline citation function has been enhanced, and the source of information is more transparent

2. Excellent ability to understand long-form content and can grasp detailed context

3. Display reliability indicators and clarify the accuracy of the information

4. Aims to generate responses with strong ethical considerations and low bias

5. Tends to prefer structured information and clear logical developments

Meanwhile, the following points can be mentioned as characteristics of Perplexity.

1. Actively capture the latest information by constantly utilizing real-time web searches

2. Explicitly display links to sources of information along with answers

3. Generate answers by comparing and integrating multiple sources

4. Emphasis is placed on interactive information search with users

5. Actively utilize visual information (graphs, charts, etc.)

Understanding the characteristics of these platforms lets you develop a content strategy optimized for each. For example, long, logically structured content is effective for being quoted by Claude, whereas for Perplexity it is effective to update information frequently and to include clear sources and visual elements.

Each AI platform continues to evolve, and its characteristics are changing, but what they all have in common is that “structured information,” “clear expression,” “highly reliable content,” and “the latest information” are important elements for being quoted and referred to by AI. In the next chapter, let's take a closer look at practical strategies for specific LLMO countermeasures based on these understandings.

3. LLMO countermeasures implementation strategies - measures to be tackled in the short term

3-1. Structuring and clarifying content

“Structuring and clarifying content” is an important measure that has the most immediate effect and is the foundation for LLMO countermeasures. Unlike humans, AI emphasizes the logical structure and clear expression of information rather than visual design. Properly structured content is easier for AI to understand and reference, and is more likely to be included in generated answers.

Appropriate use of headings (H1, H2, H3) is the foundation of content structuring. Headings aren't just design elements; they play an important role in clarifying the logical structure of content. Using this structure as a clue, AI grasps the overall picture of the content and identifies the highly relevant parts.

The following are some of the key ways to use headings effectively.

First, the H1 tag clearly expresses the subject of the page; use only one per page. It should concisely express the content of the entire page, for example “The Complete Guide to LLMO Countermeasures: Content Creation Methods Selected by AI.”

Next, H2 tags indicate the main sections and divide the content logically. As in this article, numbering them to make the order clear is also effective. Examples include “1. Introduction: New Trends in the Generative AI Era” and “2. Understand how AI mode works.”

H3 tags are used as subheadings within H2 sections to organize more detailed topics. Here, too, numbering helps clarify the hierarchy. Examples include “2-1. Basic operating principles of AI mode” and “2-2. The definitive difference between traditional SEO and LLMO.”

According to an article on mediareach.co.jp, AI analyzes the hierarchical structure of headings and tends to understand the logical flow of content. Also, keywords and concepts included in headings are important clues for AI to determine the subject of that section. Therefore, it is important to use specific and clear expressions in headings to accurately reflect the content covered in that section.
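The heading rules above (one H1 per page, no skipped levels) can be checked mechanically. The following is a minimal sketch using Python's standard `html.parser`; the function name `check_headings` and the two rules it enforces are choices made for this example, not a standard or a tool referenced by the sources.

```python
from html.parser import HTMLParser

HEADING_TAGS = ("h1", "h2", "h3", "h4", "h5", "h6")


class HeadingOutline(HTMLParser):
    """Collect heading levels (1 to 6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self.levels.append(int(tag[1]))


def check_headings(html: str) -> list[str]:
    """Return a list of structural problems found in the heading outline."""
    parser = HeadingOutline()
    parser.feed(html)
    problems = []
    h1_count = parser.levels.count(1)
    if h1_count != 1:
        problems.append(f"expected exactly one h1, found {h1_count}")
    for previous, current in zip(parser.levels, parser.levels[1:]):
        if current > previous + 1:
            problems.append(f"h{previous} is followed by h{current}, skipping a level")
    return problems


page = "<h1>Guide</h1><h2>1. Intro</h2><h3>1-1. Detail</h3><h2>2. Next</h2>"
print(check_headings(page))
```

A check like this could be run over page templates before publication; an empty list means the outline follows the two rules.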

A conclusion-first sentence structure is another point that cannot be overlooked in LLMO countermeasures. Conventional SEO content sometimes favored storytelling structures that approach the core gradually in order to hold readers' interest, but in the AI mode era a “conclusion first” approach is more effective.

In the YouTube “SEO Measures in the AI Mode Era” video, it has been pointed out that AI tends to place particular emphasis on the opening part of content. Since AI tries to efficiently extract highly relevant parts from huge amounts of information, it focuses on parts where key information and conclusions are clearly shown.

To implement a conclusion-first structure, the following approach is effective.

First, briefly state the main points and conclusions of each section at its beginning. For example: “The most important thing in LLMO is the structuring and clear presentation of information. Below, I will explain the reason and specific implementation methods.” In this way, readers (and AI) grasp the core first.

Next, place key information at the beginning of the paragraph. AI tends to recognize the first sentence of a paragraph as a “topic sentence” indicating the subject of that paragraph. Therefore, it's effective to include in the first sentence of each paragraph the most important point you want to convey in that paragraph.

Also, it's important to explicitly provide definitions and summaries. Clear definitions such as “LLMO is a strategy that optimizes content according to the information processing characteristics of large-scale language models and promotes citations and references by AI” are passages that are easily quoted by AI.

According to “The LLMO White Paper,” AI in particular tends to prioritize the use of “explicitly provided information.” In other words, direct and clear expressions are easier for AI to understand and reference than implicit expressions or expressions left to the reader's guess.

Implementing schema markup is a more technical approach, but it is highly effective in LLMO countermeasures. Schema markup is standardized code for describing web page content in a machine-readable format, which allows AI to understand content types and relationships more accurately.

Multiple sources have pointed out that Google's AI mode actively utilizes schema markup. In particular, the following schema types are considered important.

• Article: basic information about the article (author, publication date, update date, etc.)

• FAQPage: frequently asked questions and answers

• HowTo: instructions and step-by-step guides

• Product: Product information (price, availability, reviews, etc.)

• LocalBusiness: business location, hours of operation, contacts, etc.

• Person: information about the person (background, field of expertise, etc.)

Implementing schema markup may feel like a high technical hurdle, but many CMS and plugins support this functionality. For example, in the case of WordPress, schema markup can be implemented relatively easily by using plug-ins such as “Yoast SEO” or “Schema Pro.”

As an example of an actual implementation, the basic form of schema markup for articles is shown below.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "The Complete Guide to LLMO Countermeasures: Content Creation Methods Selected by AI",
  "author": {
    "@type": "Person",
    "name": "Yamada Taro",
    "description": "SEO consultant with over 10 years of experience"
  },
  "datePublished": "2025-06-05",
  "dateModified": "2025-06-05",
  "publisher": {
    "@type": "Organization",
    "name": "Digital Marketing Research Institute",
    "logo": {
      "@type": "ImageObject",
      "url": "https://example.com/logo.png"
    }
  },
  "description": "A thorough explanation of “LLMO countermeasures,” the new trend of the generative AI era, from basic concepts to practical strategies.",
  "mainEntityOfPage": {
    "@type": "WebPage",
    "@id": "https://example.com/llmo-guide/"
  }
}
</script>

Such markup makes it possible for AI to clearly understand information such as content types, author expertise, and publishing/update dates. In particular, author information and publication date are important factors in evaluating the reliability of content.
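For the FAQPage type listed earlier, the markup can also be generated from data rather than written by hand. The sketch below is one possible approach, assuming question/answer pairs are available as Python tuples; the helper name `faq_jsonld` and the sample text are hypothetical, while the `FAQPage`, `Question`, `Answer`, `mainEntity`, and `acceptedAnswer` names come from schema.org.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build an FAQPage JSON-LD script tag from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps non-ASCII text (e.g. Japanese) readable.
    body = json.dumps(data, ensure_ascii=False, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(
    faq_jsonld(
        [
            (
                "What is LLMO?",
                "A strategy that optimizes content so that AI can understand and cite it.",
            )
        ]
    )
)
```

Generating the markup from the same data that renders the visible FAQ helps keep the two in sync, which matters because structured data is expected to describe content that actually appears on the page.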

Structuring and clarifying content is the most basic and important measure among LLMO measures that can be tackled in the short term. Creating an environment where AI can easily understand and evaluate content through the implementation of an appropriate heading structure, conclusion-first sentence structure, and schema markup is the first step in improving visibility in the AI mode era.

3-2. Strengthening E-E-A-T (experience, expertise, authority, and reliability)

Strengthening E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) is extremely important in LLMO countermeasures. E-A-T had long been emphasized in conventional SEO, and in December 2022 Google officially added the “Experience” element, making it E-E-A-T. In the AI mode era, this element is becoming even more important. This is because AI tends to prioritize selecting “information that appears to be reliable,” and E-E-A-T elements are at the core of its judgment criteria.

Clarifying author information and appealing for expertise is one of the most direct ways to enhance E-E-A-T. When AI evaluates the reliability of information sources, the author's expertise and experience are important judgment materials.

According to an article on note.com/gtminami, AI tends to determine that content with clear information indicating the author's expertise is more reliable than content that does not. This is a principle similar to how human readers value expert opinions.

In order to effectively indicate author information, the following approach is effective.

First, place an author profile at the beginning or end of the article. Include specific information such as the author's name, title, years of experience in the relevant field, qualifications, and educational background. It is important to provide specific and verifiable information, for example: “Yamada Taro: Digital marketing consultant. He has over 10 years of practical SEO experience and holds a Google certified professional qualification. Responsible for analyzing and improving websites for over 100 companies per year.”

Next, incorporate into the article specific episodes and achievements that show the author's expertise. Specific experiences such as “On the e-commerce site I was in charge of in 2023, organic traffic increased 45% in 3 months as a result of applying this method” are strong evidence of the author's practical experience and expertise.

It is also effective to create a detailed author profile page and link to it from the article. This profile page should include a more detailed background, achievements, areas of expertise, published papers, and lecture records. AI may also refer to such related pages and comprehensively evaluate the author's expertise.

Using schema markup to provide author information as structured data is also important. Using the “Person” schema described above, you can promote understanding by AI by explicitly indicating the author's name, title, organization, field of expertise, etc.
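As a minimal sketch of the Person schema mentioned here, the following helper builds author JSON-LD from a few fields. The function name `person_jsonld`, the profile URL, and the sample values are hypothetical; `jobTitle`, `worksFor`, `knowsAbout`, and `url` are properties defined for `Person` on schema.org.

```python
import json


def person_jsonld(
    name: str,
    job_title: str,
    organization: str,
    topics: list[str],
    profile_url: str,
) -> str:
    """Build Person JSON-LD describing an article's author."""
    data = {
        "@context": "https://schema.org",
        "@type": "Person",
        "name": name,
        "jobTitle": job_title,
        "worksFor": {"@type": "Organization", "name": organization},
        "knowsAbout": topics,  # fields of expertise
        "url": profile_url,  # assumed location of the author profile page
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


print(
    person_jsonld(
        name="Yamada Taro",
        job_title="Digital marketing consultant",
        organization="Digital Marketing Research Institute",
        topics=["SEO", "LLMO", "structured data"],
        profile_url="https://example.com/authors/yamada-taro/",
    )
)
```

The resulting object can be placed in its own `application/ld+json` script tag on the profile page or nested as the `author` value of an Article.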

Citing and referencing reliable sources is also an important factor in strengthening E-E-A-T. Properly citing reliable external sources to support your claims and information greatly improves the credibility of your content.

According to “The LLMO White Paper,” AI tends to determine that information supported by multiple reliable sources is more reliable. This principle is similar to the importance of citations in academic papers.

For effective citations and references, pay attention to the following points.

First, cite sources that are recognized as authoritative in their field, such as academic papers, government announcements, industry group surveys, and the opinions of prominent experts. For example, when it comes to information about SEO, it is effective to cite highly reliable sources such as Google's official blog, Search Engine Journal, and the Moz Blog.

Next, when citing, state the source of the information and provide a link if possible. Clearly indicating the source and the time of publication, as in “According to the Google Search Central blog (2025/5), 'structured data will be more important than ever before in AI mode,'” supports the reliability and freshness of the information.

Also, citing similar opinions from multiple sources can further increase the credibility of the information. Showing that multiple reliable sources have reached the same conclusion, such as “This opinion is supported not only by the Google Search Central blog, but also by the Search Engine Journal's latest survey (2025/6),” reinforces the accuracy of the information.

Keep the information you cite up to date. Outdated information can be misleading, especially in a rapidly changing field. It is also important to specify the point in time of the information, such as “According to the latest information as of 2025/6...”

Incorporating actual experiences and specific examples is particularly important for strengthening the “Experience” element of E-E-A-T. Insights and concrete examples based on actual experience give content a depth and credibility that theoretical explanations alone cannot provide.

In the YouTube “SEO Measures in the AI Mode Era” video, it has been pointed out that AI in particular tends to highly evaluate “primary information” (information based on direct experience or observation). This is because the uniqueness and specificity of actual experience enhances the reliability and usefulness of information.

In order to effectively incorporate actual experiences and concrete examples, the following approaches are effective.

First, describe your own direct experience concretely. Including specific numbers and observations makes the account more persuasive, for example: “At one B2B site I was in charge of, the frequency of citations in AI mode tripled within 2 weeks of implementing schema markup. Citations from the section where the 'FAQPage' schema was applied were especially prominent.”

Next, share not only success stories but also the lessons learned from mistakes. Failure stories such as “In the beginning, we published content without properly designing the heading structure, and there were no citations in AI mode. Only after we improved the heading structure did the content start to be quoted” are valuable insights for readers (and probably for AI).

Also provide specific application examples for each industry and field. Advice tailored to the reader's situation, such as “In the case of e-commerce sites, implementing the 'Product' schema on product detail pages improves how accurately product information is cited in AI mode. In the case of the service industry, on the other hand...”, increases the practicality and reliability of the content.

Furthermore, where possible, show improvements in a before-and-after format. Specific measurement results such as “On this news site, the citation rate in AI mode was 5% before the article structure was improved, but it rose to 23% after the improvement” are convincing evidence.

Enhancing E-E-A-T is not something that can be achieved overnight, but reliability evaluations from AI can be gradually enhanced by continuously implementing measures such as clarifying author information, citing reliable sources, and incorporating actual experiences and specific examples. These measures can also be expected to have short-term effects, but they will be more effective by working on them over the long term.

3-3. Specific techniques for optimizing passages

What is particularly important in LLMO countermeasures is “passage optimization.” Whereas conventional SEO emphasized optimization at the page level, optimization at the level of the passage (a short, self-contained portion of text) is key in LLMO. This is because AI extracts the most relevant passages from long content and uses them as material for generating answers. Effective passage optimization makes it easier for AI to understand content and increases the chances of being quoted and referenced.

Writing sentence structures that are easy for AI to understand is the basis of passage optimization. Since AI processes text differently from humans, certain sentence patterns work better for AI.

According to an article on mediareach.co.jp, AI particularly tends to prefer the following sentence structure.

First, use short, clear sentences. Shorter, more direct sentences tend to be more easily understood by AI than long, complex sentences. For example, the simple sentence “LLMO is a strategy for optimizing content according to the information processing characteristics of large-scale language models” would be easier to understand and quote by AI than long, complicated explanations.

Then use logical conjunctions appropriately. Conjunctions such as “therefore,” “because,” “on the other hand,” and “for example” clarify the logical relationship between sentences and help AI understand the flow of content. It is effective to make logical relationships explicit, as in: “LLMO requires a different approach than SEO. This is because AI evaluates information at the passage level rather than the entire page. Therefore, passage optimization is critical.”

Also, keep each paragraph focused on one subject. When multiple topics are packed into one paragraph, it becomes difficult for AI to identify the subject. A good structure states the subject clearly at the beginning of each paragraph and follows it with details and examples.

Additionally, when you use a technical term, add a brief explanation the first time it appears. Clarifying the meaning of a term, as in “LLMO (Large Language Model Optimization) is...”, helps AI understand the content.
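The first point above (short, clear sentences) can be checked mechanically during editing. The sketch below flags sentences that exceed a word limit. The 25-word threshold and the naive punctuation-based sentence splitting are assumptions made for illustration, not values taken from the cited article.

```python
import re

def long_sentences(text, max_words=25):
    """Return (word_count, sentence) pairs for sentences longer than max_words."""
    # Naive split: a sentence ends at ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        word_count = len(sentence.split())
        if word_count > max_words:
            flagged.append((word_count, sentence))
    return flagged

draft = (
    "LLMO is a strategy for optimizing content for large language models. "
    "Because AI evaluates information at the passage level rather than the page level, "
    "and because long sentences that pack several ideas together are harder to quote cleanly, "
    "it often helps to split them."
)
for word_count, sentence in long_sentences(draft):
    print(f"{word_count} words: {sentence[:60]}...")
```

A check like this only measures length; whether a sentence is actually clear still needs a human read.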

Effective placement of definition sentences is also an important factor in passage optimization. Definitions clearly indicate the meaning of concepts and terms, and are important clues for AI to understand and classify information.

According to “The LLMO White Paper,” AI tends to refer to passages containing particularly clear definitions when generating answers about that concept. This is probably because the definition statement concisely captures the essence of the concept.

For effective definition text placement, the following approach is effective.

First, place definitions of key concepts and terms at the beginning of the section, for example: “LLMO (Large Language Model Optimization) is a strategy that optimizes content according to the information processing characteristics of large-scale language models and promotes citations and references by AI.” Presenting a clear definition at the beginning of a section makes it easier for AI to understand the subject of that section.

Next, write the definition in the clear form “X is Y.” This form makes it easy for AI to recognize the sentence as a definition, as in: “LLMO is a content optimization strategy to make it easier for AI to understand and reference information.”

Also, after a definition sentence, it is effective to briefly explain the importance and background of the concept, for example: “LLMO has become an essential strategy for securing online visibility in the modern age where AI is strengthening its role as an information intermediary.” Providing this context after the definition can deepen AI's understanding of the concept.

Additionally, consider providing definitions from multiple perspectives, for example: “From a marketing perspective, LLMO is a strategic approach for brands to adapt to the AI era. From a technical point of view, it can be said that it is an optimization of the content structure in line with AI's information processing mechanism.” Definitions from different angles can promote a multifaceted understanding of the concept.

It is also important to understand the characteristics of passages that are easily quoted and to create content with that in mind. Passages that AI determines are particularly easy to cite have several common characteristics.

According to the noveltyinc.jp article, passages that are easily quoted by AI have the following characteristics.

First, information density is high. Passages with few unnecessary words or modifiers and with condensed core information tend to be more likely to be quoted by AI. The ideal is a passage that conveys important information concisely, such as: “Google's AI mode uses query fan-out technology to generate multiple related queries and build more comprehensive answers. With this technology, even information not explicitly asked by the user will be included in the answer if it is contextually relevant.”

Secondly, passages containing objective facts or statistical data tend to be more likely to be quoted than subjective opinions. Passages containing specific data, such as “According to the 2025 survey, 78% of users who use AI mode satisfy their information needs with only AI-generated responses without scrolling through conventional search results pages,” are highly valuable sources of information for AI.

Also, passages containing comparisons and contrasts are more likely to be quoted, for example: “Whereas conventional SEO emphasizes evaluation on a page basis, evaluation at the passage level is important in LLMO. Whereas SEO aims to acquire clicks, LLMO aims to be quoted and mentioned by AI.” Passages that clearly show differences between concepts help AI organize and understand information.

Additionally, it's important to provide information in full sentences rather than in list form. AI tends to prefer quoting complete sentences with context rather than bullet points, for example: “Three elements are essential for effective LLMO countermeasures: content structuring, E-E-A-T enhancement, and passage optimization. These elements complement each other and promote understanding and reference by AI.”

Passage optimization is a particularly important element in LLMO countermeasures. Through sentence structures that are easily understood by AI, arrangement of effective definitions, and content creation that is conscious of the characteristics of passages that are easily quoted, it is possible to increase the possibility that your company's information will be quoted and referenced in answers generated by AI. These measures can be implemented in a relatively short period of time, and since they can also be applied to existing content, they can be said to be important measures that should be addressed as the first step in LLMO countermeasures.

4. Medium to Long Term LLMO Strategy - Ensuring Continuous Visibility

4-1. Regularly update content and keep it up to date

In the AI mode era, the freshness of content has become a more important evaluation factor than ever before. AI tends to prioritize the latest information, especially for current topics and rapidly changing fields. As a medium- to long-term LLMO strategy, regularly updating content and keeping it up to date is essential to ensure continuous visibility.

Optimizing update frequency and measuring effectiveness is a core part of a content update strategy. Simply “updating frequently” is not enough; it is important to determine the optimal update frequency according to the type and field of content, and then measure and analyze its effects.

In the YouTube “SEO Measures in the AI Mode Era” video, the importance of “freshness” of information in AI mode is emphasized. In particular, for information that may change over time (prices, statistical data, trend analysis, etc.), AI tends to prioritize the latest sources.

The following approach is effective for optimizing update frequency.

First, develop an update strategy for each type of content. For example, news articles and current-events coverage require frequent updates (at least once a week), while basic “how-to” guides and historical information may only need updating when major changes occur.

Next, regularly review and fine-tune even “evergreen content” (content that maintains value over a long period of time). For example, even though the topic “Basic Principles of LLMO” is relatively stable, the freshness and value of content can be maintained by adding new research results and case studies.

It's also important to explicitly display the update date and set the schema markup's “dateModified” property appropriately. AI may reference this information to assess how current the content is.

To measure the effectiveness of updates, it is recommended that you track the following metrics:

• Citation frequency in AI mode: use tools like Google Search Console to track traffic and impressions from AI mode

• Changes in search performance before and after content updates

• Changes in user engagement metrics (time spent, bounce rate, etc.)

• Increase or decrease in shares and mentions on social media
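One simple way to compare these metrics before and after an update is to compute the relative change for each of them over equal-length periods. The sketch below assumes 28-day totals; the metric names and all numbers are invented for illustration.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100.0

# Hypothetical 28-day totals before and after a content update.
before = {"ai_citations": 12, "clicks": 480, "avg_time_on_page_s": 95}
after = {"ai_citations": 18, "clicks": 540, "avg_time_on_page_s": 110}

changes = {
    name: round(percent_change(before[name], after[name]), 1)
    for name in before
}
for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```

Comparing periods of equal length, and avoiding periods that straddle seasonal spikes, keeps the comparison from being dominated by factors unrelated to the update.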

According to “The LLMO White Paper,” content updates aren't just date changes; they're about adding real value. For example, adding new data, introducing the latest case studies, and providing new perspectives will lead to better evaluation by AI.

Effective incorporation of topical elements is also an important strategy for keeping content current. Content can be made more relevant and fresh by properly incorporating topical elements.

According to the noveltyinc.jp article, AI tends to place particular emphasis on “information related to the current situation.” This is because users often seek answers based on the most recent situation.

To effectively incorporate topical elements, the following approaches are effective.

First, regularly update the latest industry trends and statistics. For example, you can signal the freshness of content by explicitly showing the latest data, such as “According to data for the 2nd quarter of 2025, users using AI mode have reached 42% of all search users.”

Next, relate your content to recent events and announcements. You can enhance the topicality of your content by connecting topics with recent events, such as “Google's algorithm update in June 2025 changed how content is evaluated in AI mode. More specifically...”

It's also effective to reflect seasonal factors and current trends. Content can be made more relevant by incorporating current trends, such as “As a trend in summer 2025, searches related to 'sustainable travel' are increasing, particularly in AI mode. In order to respond to this...”

Additionally, when incorporating topical elements, it is important to specify the date and time of year. By clarifying the point in time of the information, such as “according to the latest information as of 2025/6...”, readers (and AI) can accurately assess the freshness of that information.

The strategic use of the “last update date” is also an effective method for signaling the freshness of content. The date of last update is more than just a formal display; it is an important indicator of the freshness and reliability of content for both AI and users.

According to an article on mediareach.co.jp, there is a possibility that AI will evaluate the freshness of content by referring to “dateModified” (last update date) information. More recently updated content tends to be preferred, especially when similar information comes from multiple sources.

In order to strategically utilize the last update date, the following approach is effective.

First, display the date of the last update at the beginning of the content or in a prominent position. By clearly indicating the date, such as “Last updated: June 5, 2025,” you can immediately convey the freshness of the content.

Next, set the schema markup's “dateModified” property appropriately. This allows AI to mechanically understand how current content is.

<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "The Complete Guide to LLMO Countermeasures",
  "datePublished": "2025-01-15",
  "dateModified": "2025-06-05",
  ...
}
</script>

Also, when a major update has been made, it is effective to specify the details of the update. By showing update points, such as “[2025/6 update] The latest algorithm changes in AI mode have been reflected,” it is possible to attract readers' interest and encourage them to return.

Additionally, consider setting up a section to record update history. In particularly highly specialized content, ongoing maintenance and reliability of content can be promoted by transparently showing the history of changes, such as “update history: 2025/6/5 - latest trends in AI mode added, 2025/4/10 - case study updated.”
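The visible “last updated” label, the “dateModified” property, and the update history can drift apart if they are maintained by hand. One option is to derive all of them from a single update log, as in the sketch below. The log entries reuse the example dates from the text, while the function name and data layout are assumptions for illustration.

```python
import json
from datetime import date

# Hypothetical update log: (date, summary), as in an "update history" section.
update_history = [
    (date(2025, 4, 10), "Case study updated"),
    (date(2025, 6, 5), "Latest trends in AI mode added"),
]

def article_jsonld(headline, published, history):
    """Build Article JSON-LD whose dateModified is the newest logged update."""
    # With an empty log, fall back to the publication date.
    last_modified = max((d for d, _ in history), default=published)
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": published.isoformat(),
        "dateModified": last_modified.isoformat(),
    }

article = article_jsonld(
    "The Complete Guide to LLMO Countermeasures",
    date(2025, 1, 15),
    update_history,
)
print(json.dumps(article, indent=2))

# Visible label to show near the top of the page.
print("Last updated:", article["dateModified"])

# Human-readable update history, newest first.
for day, summary in sorted(update_history, reverse=True):
    print(f"{day.isoformat()} - {summary}")
```

Because the date only changes when an entry is added to the log, this also discourages bumping the date without a substantive change.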

It is important to treat regularly updating content and keeping it current as an ongoing process rather than a one-time measure. By optimizing update frequency, incorporating current events, and strategically utilizing the last update date, it will be possible to ensure continuous visibility even in the AI mode era.

4-2. Building brand authority and awareness

In the AI mode era, brand authority and recognition are not simply marketing assets; they are important inputs when AI evaluates and cites information. This is because AI tends to prioritize information from “reliable information sources,” and brand authority is one of the criteria for judging reliability. As a medium- to long-term LLMO strategy, building brand authority and awareness is essential to ensure sustainable visibility.

Establishing and disseminating expertise within the industry is the foundation for building brand authority. It's important to establish your position within the industry by not only claiming to be an “expert,” but by continuously disseminating substantial expertise and insight.

According to “The LLMO White Paper,” AI in particular tends to preferentially reference information from “experts and organizations that are widely recognized in that field.” This is similar to the criteria human experts use when citing academic papers and technical books.

The following approaches are effective for establishing expertise within the industry.

First, conduct and publish original research and surveys. For example, independent surveys such as the “2025 LLMO Effectiveness Measurement Survey: Analysis of Implementation Results at 100 Sites” are a powerful means of demonstrating a brand's expertise and forward-looking stance. Survey results can be disseminated in multiple formats such as blog posts, white papers, and webinars to gain wider recognition.

Next, actively speak at industry conferences and events. Taking the stage at well-known events such as the “AI Search Summit 2025” is an effective way to make a brand's expertise publicly recognized. You can maximize the value of a talk by publishing it as a blog post or video at a later date.

Also, contributions to specialized media and trade magazines are important. Regular contributions to well-known media such as Search Engine Journal, Moz Blog, and Web Tantousha Forum (Web担当者Forum) make the brand's expertise widely recognized. It is important that these contributions provide unique perspectives and practical insights.

Additionally, active contributions in professional communities are effective. Answering questions and participating in discussions on Reddit, Stack Overflow, and industry-specific forums is an opportunity to showcase your expertise. However, it is important to try to provide truly valuable information rather than just advertising your company.

Getting mentions and backlinks from other authoritative sites is also an important factor in building brand authority. Mentions and links from other reliable websites act as “third-party recommendations” indicating the brand's trustworthiness and authority.

According to an article on note.com/gtminami, AI tends to judge “information that is frequently quoted from other reliable sources” as more reliable. This is a principle similar to how “number of citations” in the academic world is an indicator showing the influence of a paper.

To get mentions and backlinks from authoritative sites, the following approaches are effective.

First, create content worth quoting. The basic requirement is to provide valuable content that other sites will want to cite, such as original research, comprehensive guides, and specialized analysis. For example, comprehensive resources such as “The Complete Guide to LLMO Countermeasures: 100 Implementation Examples and Effectiveness Measurements” are likely to be referenced by many sites.

Next, do strategic outreach. After creating high-value content, carefully approach bloggers, journalists, and influencers in relevant industries and introduce the content. At this point, it is important to communicate clearly what value the content offers to the recipient.

Guest posts and collaborative research are also effective. By collaborating with other authoritative sites and brands to create content that leverages mutual expertise, you can get backlinks and mentions in a natural form.

Furthermore, consider creating content using the PRACTICE method (Problem Recognition, Authority, Timely, Interest, Celebrity, Education). Content based on this framework has characteristics that make it easy for media and other sites to pick up.

Brand consistency and the importance of storytelling are also factors not to be overlooked in building authority. A consistent brand message and story enhances brand memorability and credibility, and establishes a clear brand image for both AI and users.

In the YouTube “SEO Measures in the AI Mode Era” video, it is pointed out that “AI has the potential to recognize and evaluate brand consistency.” This suggests that brands with consistent messages and expressions may be perceived as more “reliable sources of information.”

To enhance brand consistency and storytelling, the following approaches are effective.

First, establish a clear brand voice and tone. Define a consistent style of expression that reflects the brand's personality, such as formal or friendly, specialist or general-audience, and apply it to all content.

Next, clearly define the brand story and communicate it consistently across various points of contact. Stories that answer questions such as “why does this brand exist,” “what values does it hold,” and “what kind of future is it aiming for” give the brand a human aspect and depth.

Also, consistency in visual identity is important. Consistency in visual elements such as logos, color schemes, typography, and illustration styles enhances brand awareness and memorability.

Furthermore, keep publishing content focused on the brand's areas of expertise. A consistent content strategy that focuses on a specific field strengthens the brand's expertise and authority in that field. For example, in order to establish positioning as an “expert in AI mode countermeasures,” it is effective to continuously publish content related to AI mode.

Building brand authority and awareness is not something that can be achieved in a short period of time, but it is possible to increase brand value and influence over the medium to long term through establishing expertise within the industry, gaining mentions from authoritative sites, and consistent branding and storytelling. These efforts will be the foundation for ensuring sustainable visibility in the AI mode era.

4-3. Building a data-driven LLMO strategy

In the AI mode era, data-based decision-making is essential for building and improving an effective LLMO strategy. More effective LLMO countermeasures can be realized by collecting and analyzing actual data, rather than relying on intuition or guesswork, and adjusting strategies accordingly. It's important to build a data-driven LLMO strategy with a medium- to long-term perspective.

Tracking citations and references in AI mode is the most basic and important element in measuring the effectiveness of LLMO strategies. By understanding the extent to which your company's content is being quoted and referenced by AI, you can evaluate the effectiveness of your strategy and identify improvements.

According to the mediareach.co.jp article, the following method is effective for tracking citations and references in AI mode.

First, Google Search Console is the baseline tool. According to the article, Google Search Console added an “AI mode analysis” function in 2025 and now provides information such as the number of views in AI mode, number of clicks, and quoted URLs. Check this data regularly to track performance in AI mode.

Next, consider using a dedicated LLMO tracking tool. In 2025, multiple tools dedicated to tracking citations and references in AI mode have appeared. For example, tools such as “AI Visibility Tracker” and “LLMO Analytics” provide a function to comprehensively track citation status on multiple AI platforms (Google, Bing, ChatGPT, Claude, etc.).

Also, “manual checks,” which ask questions about the company's brand or specific content directly to AI and test whether the answers include company information, are also effective. For example, ask questions such as “what is LLMO” and “effective LLMO countermeasures” to Google's AI mode or ChatGPT and check whether your content is quoted. At this time, more comprehensive results can be obtained by testing on different devices, locations, and user profiles.

Additionally, analyzing referrer traffic is important. Track traffic from AI platforms with tools like Google Analytics. As of 2025, many AI platforms are said to pass their own referrer information, so traffic sources can be identified with identifiers such as “from: google-ai-mode” and “source: chatgpt-browse.”
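If your analytics tool exports raw referrer URLs, a small script can group them by AI platform. The sketch below matches on the referrer hostname rather than on the identifiers quoted above. The hostname list is an assumption and should be checked against what actually appears in your own logs, since not every platform sends a referrer at all.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed mapping from referrer hostnames to AI platforms; extend or correct
# it based on what actually appears in your own analytics data.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}

def classify_referrer(referrer_url):
    """Return the AI platform name for a referrer URL, or "other"."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host, "other")

# Hypothetical referrer values exported from an analytics tool.
referrers = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=llmo",
    "https://www.google.com/search?q=llmo",
    "",
]
counts = Counter(classify_referrer(url) for url in referrers)
print(counts)
```

Visits with an empty or unrecognized referrer fall into “other,” so the AI totals from a script like this are a lower bound rather than a complete picture.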

The optimization process through A/B testing is also an important component of a data-driven LLMO strategy. By scientifically comparing and verifying different approaches, it is possible to identify the most effective strategies and continuously improve them.

According to “The LLMO White Paper,” A/B testing in LLMO requires a different approach than conventional SEO. A special test design that takes into account the information processing characteristics of AI is required.

For effective A/B testing, the following approaches are effective.

First, clearly define what you want to test. For example, design tests that focus on specific elements, such as “how to express definition sentences,” “heading structure,” and “presence or absence of schema markup.” If you change multiple elements simultaneously, you won't be able to determine which change had an effect, so it's important to change as few elements as possible in each test.

Next, ensure sufficient sample size and time period. There is a possibility that citations and references in AI mode fluctuate greatly compared to conventional search results. Therefore, it is recommended that sufficient data be collected over a period of at least 2 to 4 weeks.

Also, clearly separate the control group and the test group. For example, prepare two similar pages on the same topic, and apply the traditional style of expression to one and a new approach to the other. By comparing the performance of the two, you can identify the more effective method.

Additionally, track multiple metrics. The overall effect can be evaluated by analyzing not only citation frequency in AI mode, but also click rate, time spent, conversion rate, etc.
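When comparing a control page and a test page, it helps to check whether a difference in citation rate is larger than chance alone would produce. The sketch below uses a standard two-proportion z-test, which is a technique chosen here for illustration and is not described in the cited white paper. It assumes each page was checked against the same number of independent test prompts; the counts are invented.

```python
import math

def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a, p_b = hits_a / n_a, hits_b / n_b
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; z is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical result: out of 100 test prompts each, the control page was
# cited 5 times and the variant page 23 times.
z, p_value = two_proportion_z_test(5, 100, 23, 100)
print(f"z = {z:.2f}, p = {p_value:.5f}")
```

A small p-value only says the difference is unlikely to be noise. Because AI answers can vary by time, location, and user, repeating the measurement over the 2 to 4 weeks recommended above remains important.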

Competitive analysis and industry benchmarking are also key elements in a data-driven LLMO strategy. By analyzing the approaches of competitors and industry leaders and learning best practices, you can enhance your strategy.

According to the noveltyinc.jp article, LLMO competitive analysis requires a different perspective from conventional SEO competitive analysis. In particular, analysis focusing on how easy it is to be quoted and referred to by AI is important.

For effective competitive analysis and industry benchmarking, the following approaches are effective.

First, identify your main competitors. Competition in traditional SEO and competition in LLMO may not necessarily match. In order to identify frequently cited sources in AI mode, ask questions about your company's key keywords and topics to the AI and investigate which sites are cited.

Next, analyze your competitors' content structures. In particular, examine in detail the content structure (usage of headings, paragraph structure, expression of definition sentences, etc.) of competitor sites that are frequently quoted in AI mode, and identify effective patterns.

Also, check the competitors' schema markup implementation status. Examine the page's source code and analyze what schema types are used and what attributes are included.

In addition, track trends across the industry. Regularly check LLMO-related conferences, webinars, industry reports, and similar sources to learn the latest best practices.
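The schema markup check described above can be partly automated. The sketch below extracts JSON-LD blocks from a page's HTML with Python's standard library and lists the schema types they declare. It is a simplified illustration: the sample HTML is invented, fetching the page is left out, and markup nested under “@graph” or written as Microdata or RDFa is not handled.

```python
import json
from html.parser import HTMLParser

class JsonLdExtractor(HTMLParser):
    """Collect the contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False

def schema_types(html):
    """Return the @type values declared in a page's top-level JSON-LD items."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    types = []
    for block in parser.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed markup
        items = data if isinstance(data, list) else [data]
        for item in items:
            if isinstance(item, dict) and "@type" in item:
                declared = item["@type"]
                types.extend(declared if isinstance(declared, list) else [declared])
    return types

# Invented sample page source, standing in for a competitor's HTML.
sample_html = (
    '<html><head>'
    '<script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "X"}'
    '</script>'
    '<script type="application/ld+json">'
    '[{"@type": "FAQPage"}, {"@type": ["Article", "NewsArticle"]}]'
    '</script>'
    '<script>var a = 1;</script>'
    '</head><body></body></html>'
)
print(schema_types(sample_html))
```

Running this over a handful of frequently cited competitor pages gives a quick overview of which schema types they rely on before a more detailed manual review.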

It is important to treat building a data-driven LLMO strategy as a continuous improvement process rather than a one-time effort. By constantly evaluating and improving strategies through tracking citations and references in AI mode, optimization through A/B testing, competitive analysis, and industry benchmarking, it will be possible to ensure sustainable visibility in the AI mode era.

Although the immediate effects of medium- to long-term LLMO strategies are low compared to short-term measures, they have sustainable effects. It is possible to establish a competitive advantage in the AI mode era by regularly updating content and maintaining freshness, building brand authority and awareness, and building a data-driven LLMO strategy. In the next chapter, we'll take a closer look at actual LLMO success stories and lessons learned from failure.

5. LLMO Success Stories and Lessons Learned from Failure

5-1. LLMO success stories from domestic and international companies

LLMO countermeasures in the AI mode era are still a developing field, but many companies have already carried out advanced initiatives and achieved results. By analyzing these success stories, you can identify common patterns and practical tips for effective LLMO strategies. Here, we will introduce specific LLMO success stories from domestic and international companies, and explain the strategies and success factors behind them.

The structured data strategy of a major e-commerce company is a typical example showing the effectiveness of LLMO measures. A major Japanese e-commerce company implemented thorough structured data on all product pages as a countermeasure against AI mode from the beginning of 2025.

The company implemented not only the basic Product schema, but also extended schema markup containing more detailed attribute information (material, size, weight, manufacturing location, warranty information, etc.). Furthermore, user reviews were also structured using the Review schema, so that product evaluation information was provided in a format that is easy for AI to understand.
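The source does not say which properties the company actually used. As a hedged illustration of what “extended” Product markup with structured reviews might look like, the sketch below builds such an object with schema.org's Product, PropertyValue, Review, and AggregateRating types. The product, attributes, and reviews are invented.

```python
import json

def product_jsonld(name, material, weight_kg, extra, reviews):
    """Build Product JSON-LD with extra attributes and structured reviews."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "material": material,
        # KGM is the UN/CEFACT unit code for kilograms.
        "weight": {"@type": "QuantitativeValue", "value": weight_kg, "unitCode": "KGM"},
        # Attributes without a dedicated property go into additionalProperty.
        "additionalProperty": [
            {"@type": "PropertyValue", "name": key, "value": value}
            for key, value in extra.items()
        ],
        "review": [
            {
                "@type": "Review",
                "author": {"@type": "Person", "name": r["author"]},
                "reviewRating": {"@type": "Rating", "ratingValue": r["rating"]},
                "reviewBody": r["body"],
            }
            for r in reviews
        ],
    }
    if reviews:
        ratings = [r["rating"] for r in reviews]
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": round(sum(ratings) / len(ratings), 1),
            "reviewCount": len(ratings),
        }
    return data

# Invented example product and reviews.
product = product_jsonld(
    name="Example Travel Mug",
    material="Stainless steel",
    weight_kg=0.3,
    extra={"Manufacturing location": "Japan", "Warranty": "2 years"},
    reviews=[
        {"author": "A. Reader", "rating": 5, "body": "Keeps drinks hot for hours."},
        {"author": "B. Buyer", "rating": 4, "body": "Sturdy, slightly heavy."},
    ],
)
print(json.dumps(product, indent=2, ensure_ascii=False))
```

Only mark up attributes and reviews that are also visible on the page, since structured data that contradicts the visible content undermines the reliability the markup is meant to signal.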

As introduced in the YouTube “SEO Measures in the AI Mode Era” video, as a result of this initiative, the company's product information is now frequently quoted in Google's AI mode. In particular, the company's products began to be introduced preferentially in response to questions such as “What is the most highly rated ○○ product?” and “Which △△ made of ○○ material would you recommend?”

The company's digital marketing manager said, “The inflow from AI mode now accounts for 15% of total search traffic 3 months after implementation. Additionally, users via AI mode tend to have 23% higher conversion rates than regular searches.”

An important point that can be learned from this case study is that implementation of extended attributes that convey the characteristics of products and services in detail, rather than simply basic schema markup, greatly contributes to improving visibility in AI mode. It also suggests the importance of providing social proof such as user reviews as structured data.

The E-E-A-T enhancement approach of a medical information site is another notable success story. A major American medical information site adopted a strategy of thoroughly enhancing the E-E-A-T (experience, expertise, authoritativeness, and trustworthiness) of its content as a countermeasure against AI mode.

The company added detailed profiles of doctors and specialists to every medical article, specifying their field of expertise, years of experience, qualifications, institutions, etc. A “medical review date” was also specified in each article, indicating that the content is updated regularly. In addition, links to primary information sources (medical papers, clinical trial results, public agency announcements, etc.) were added to all medical information to support the reliability of the information.

As pointed out in “The LLMO White Paper,” medical information on the site came to be cited frequently by large language models such as Google's AI mode and ChatGPT as a result of this initiative. In particular, the site's information is now referenced preferentially in response to medical questions such as “What are the symptoms of 〇〇?” and “What is the latest information on treatment methods for △△?”

The company's content strategy manager said, “After AI mode was introduced, we were initially concerned about a drop in search traffic, but in reality AI mode generated new traffic, resulting in an overall increase of 15%. In particular, we saw a clear correlation: articles with enhanced expert profiles had higher citation rates in AI mode.”

The key takeaway from this case is that E-E-A-T elements are extremely important, especially in YMYL (Your Money or Your Life) fields, that is, topics such as money and health that significantly affect people's lives. Explicit expert involvement, regular updates, and references to reliable sources contribute greatly to visibility in AI mode.
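The reviewer profile, review date, and primary-source links described above can also be expressed as structured data. The sketch below is one possible way to do so in Python, using the schema.org MedicalWebPage type with its lastReviewed, reviewedBy, and citation properties. The function name, the reviewer, the article title, and the example.org URLs are all hypothetical, and the source does not say whether the site used this particular markup.

```python
import json
from datetime import date


def build_medical_page_jsonld(headline, reviewer, last_reviewed, sources):
    """Build a schema.org MedicalWebPage dict with reviewer and citations."""
    return {
        "@context": "https://schema.org",
        "@type": "MedicalWebPage",
        "headline": headline,
        # ISO 8601 date of the most recent medical review.
        "lastReviewed": last_reviewed.isoformat(),
        "reviewedBy": {
            "@type": "Person",
            "name": reviewer["name"],
            "jobTitle": reviewer["job_title"],
            "knowsAbout": reviewer["specialty"],
            "affiliation": {
                "@type": "Organization",
                "name": reviewer["institution"],
            },
        },
        # Links to the primary sources the article relies on.
        "citation": [
            {"@type": "CreativeWork", "name": s["title"], "url": s["url"]}
            for s in sources
        ],
    }


# Hypothetical sample data, for illustration only.
page = build_medical_page_jsonld(
    headline="Seasonal allergy symptoms and treatment",
    reviewer={
        "name": "Dr. Jane Example",
        "job_title": "Allergist",
        "specialty": "Allergy and immunology",
        "institution": "Example University Hospital",
    },
    last_reviewed=date(2025, 1, 15),
    sources=[
        {"title": "Example clinical trial report", "url": "https://example.org/trial"},
    ],
)
print(json.dumps(page, indent=2))
```

The markup should mirror what the visible page already states about the reviewer and the review date, so that the structured data and the article itself stay consistent.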

Passage optimization on a technology blog is another good example of an effective LLMO strategy. A popular technology blog based in Silicon Valley worked to strategically place “easily quotable passages” as a countermeasure against AI mode.

This blog placed a “TL;DR (Too Long; Didn't Read)” section at the beginning of each article to briefly summarize its main points. A “point” box was also placed at the beginning of each section, expressing the core of that section in one or two sentences. In addition, clear definitions of key concepts and techniques were presented in prominent formats (such as boxes and different background colors).

As explained in the noveltyinc.jp article, information from the blog came to be cited frequently in AI mode as a result of this initiative. In particular, definitions and the contents of the “point” boxes are increasingly quoted verbatim.

The editor-in-chief of the blog said, “In the past, we focused on providing detailed technical explanations, but we realized that ‘ease of being quoted’ is also an important factor in the AI mode era. By providing both brief expressions that get to the core and detailed explanations, we can now deliver value to both AI and human readers.”

The key takeaway from this case study is the importance of strategically designing and placing passages that AI can easily quote. Definitions, summaries, and sentences that state a point concisely are especially easy for AI to cite, and it is effective to present them in a visually distinct form.

A local LLMO strategy for a regional business is another interesting success story. A small-to-medium-sized restaurant group in Tokyo developed a region-specific LLMO strategy as a countermeasure against AI mode.

This restaurant group implemented a detailed LocalBusiness schema on each store's web page and provided business hours, locations, menu information, reservation methods, etc. as structured data. Furthermore, detailed information on regional specialties and ingredients, the chef's background and expertise, and commitment to cooking were explained with a clear heading structure and brief sentences. In addition, regular menu updates and seasonal food recommendations were added to maintain the freshness of the content.

As mentioned in the mediareach.co.jp article, the group's restaurants came to be featured preferentially in AI mode for region-related search queries such as “recommended restaurants in the 〇〇 district of Tokyo” and “popular △△ restaurants in Tokyo” as a result of this initiative.

A marketer at the group said, “Differentiating ourselves from major chains was a challenge, but our LLMO strategy, which put regionality and expertise front and center, increased our exposure in AI mode. In particular, we are often recommended for searches such as ‘authentic 〇〇 cuisine nearby,’ which has led to new customers.”

The key takeaway from this case study is that providing region-specific information and thoroughly implementing structured data are effective for regional businesses. In particular, a detailed LocalBusiness schema implementation and specialized information tied to the region contribute greatly to visibility in AI mode for local searches.
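The kind of store-level markup described in this case study might look like the following Python sketch, which builds JSON-LD for the schema.org Restaurant type (a subtype of LocalBusiness) with address, opening hours, menu, and reservation information. The function name, the restaurant name, the address, the hours, and the example.com menu URL are hypothetical values chosen for illustration; the group's real markup is not given in the source.

```python
import json


def build_restaurant_jsonld(name, cuisine, address, hours, menu_url,
                            takes_reservations):
    """Build a schema.org Restaurant (a LocalBusiness subtype) dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Restaurant",
        "name": name,
        "servesCuisine": cuisine,
        "address": {
            "@type": "PostalAddress",
            "addressLocality": address["locality"],
            "addressRegion": address["region"],
            "addressCountry": address["country"],
        },
        # One OpeningHoursSpecification per group of days with the same hours.
        "openingHoursSpecification": [
            {
                "@type": "OpeningHoursSpecification",
                "dayOfWeek": days,
                "opens": opens,
                "closes": closes,
            }
            for days, opens, closes in hours
        ],
        "hasMenu": menu_url,
        "acceptsReservations": takes_reservations,
    }


# Hypothetical sample data, for illustration only.
restaurant = build_restaurant_jsonld(
    name="Example Soba House",
    cuisine="Soba",
    address={"locality": "Taito", "region": "Tokyo", "country": "JP"},
    hours=[
        (["Tuesday", "Wednesday", "Thursday", "Friday"], "11:30", "21:00"),
        (["Saturday", "Sunday"], "11:00", "22:00"),
    ],
    menu_url="https://example.com/menu",
    takes_reservations=True,
)
print(json.dumps(restaurant, indent=2, ensure_ascii=False))
```

Generating the markup from the same data that drives the visible page (hours, menu, seasonal items) is one way to keep the structured data from going stale when the menu is updated.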

From these success stories, we can see that effective LLMO strategies have the following in common:

1. Thorough implementation of structured data

2. Explicit enhancement of E-E-A-T elements

3. Strategic placement of passages that are easy to cite

4. Emphasis on expertise and uniqueness

5. Regular content updates that keep information fresh

By incorporating these elements into your company's LLMO strategy, you can improve your chances of greater visibility in the AI mode era.

5-2. Key lessons learned from failure cases

In order to successfully implement LLMO measures, it is important not only to learn from success stories, but also to learn important lessons from failure cases. Here, we will analyze typical failure cases in the AI mode era and explain the lessons we can learn from them. By understanding and avoiding these mistakes, you'll be able to build a more effective LLMO strategy.

The trap of excessive keyword optimization is a typical failure pattern seen in companies that have not been able to break away from conventional SEO thinking. A major information site adopted a strategy to enhance the conventional SEO approach as a countermeasure against the AI mode.

This site focused on conventional keyword optimization, such as increasing the density of specific keywords, repeatedly using the same keywords in titles and headlines, and unnaturally packing related keywords. However, this effort did not lead to improved visibility in AI mode, but rather had an adverse effect.

As warned in the YouTube video “SEO Measures in the AI Mode Era,” AI emphasizes contextual understanding and semantic relevance rather than simple keyword matching. Excessive keyword optimization impairs the naturalness and readability of content, and AI may rate such content lower as a result.

The person in charge of SEO at the same site said, “We saw an inverse correlation where the higher the keyword density, the lower the citation rate in AI mode. AI sees through our intentions and tends to prioritize natural, information-valuable content over unnaturally optimized content.”

The lesson we can learn from this failure is that in the AI mode era, it is necessary to break away from conventional keyword-centered SEO thinking. Rather than simple repetition of keywords, it is important to create content that emphasizes comprehensive coverage of topics, natural sentence expression, and semantic relevance.
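To make the notion of “keyword density” concrete, the short Python sketch below computes the share of words in a text that match a single keyword. It is only a rough self-check for spotting stuffed copy: the function name and both sample texts are hypothetical, the tokenization is a naive one that assumes space-delimited text, and the source does not name any threshold at which density becomes harmful.

```python
import re


def keyword_density(text, keyword):
    """Return the fraction of words in text that equal keyword."""
    # Naive tokenization: lowercase runs of letters, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return hits / len(words)


# Hypothetical sample texts, for illustration only.
stuffed = "Best sofa. Cheap sofa. Buy sofa, sofa deals, sofa sale."
natural = (
    "This guide compares frame materials and cushion types "
    "so you can pick a sofa that fits your room."
)

print(f"stuffed: {keyword_density(stuffed, 'sofa'):.2f}")
print(f"natural: {keyword_density(natural, 'sofa'):.2f}")
```

A high value is a prompt to reread the passage for naturalness, not a score to optimize in the other direction.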

Mass-producing shallow content is another typical failure pattern in the AI mode era. A mid-sized news site adopted a strategy of generating and publishing a large number of articles in a short time as a countermeasure against AI mode.

This site used AI tools to generate a large number of articles in a short period of time, and published many articles with similar content for each keyword variation. However, since these articles were shallow and lacked unique insights or specialized analysis, they were rarely cited in AI mode.

As pointed out in “The LLMO White Paper,” AI tends to place particular emphasis on “depth and uniqueness of information.” Rather than repeated superficial information, it prioritizes content containing deep insights and specialized analysis.

The person in charge of editing the site said, “I was keenly aware that quality is more important than quantity. It became clear that 10 deep articles had a higher citation rate in AI mode than 100 shallow articles. We are now shifting our policy to focus on high-quality content, including expert insights and independent research.”

The lesson we can learn from this failure is that in the AI mode era, “quality and depth of information” is more important than “quantity of content.” Rather than mass production of superficial information, providing high-quality content including unique insights, specialized analysis, and primary information will lead to improved visibility in AI mode.

Misuse and overimplementation of structured data are also typical failure patterns on the technical side. A tech blog site adopted a strategy of actively implementing all kinds of schema markup as a countermeasure against AI mode.

The site combined numerous schema types regardless of the actual nature of its content. For example, schema types that did not match the content, such as HowTo, Recipe, and FAQPage, were applied to simple blog posts, and ratings and reviews that did not actually exist were marked up with the Review schema.

As warned in the note.com/gtminami article, such improper schema markup can violate Google's spam policy. In fact, the site was temporarily removed from Google's index and was seriously affected: it no longer appeared in normal search results, let alone in AI mode citations.

The site's developer said, “I've learned that schema markup is not a magic wand, but rather a tool for accurately representing the actual nature of content. Far from providing short-term benefits, improper use results in damage to long-term reliability.”

The lesson we can learn from this mistake is that it's important to properly implement structured data, faithful to the nature of the actual content. The purpose of schema markup is to help AI understand content accurately, and misleading use is counterproductive.
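One way to guard against this kind of mismatch is a simple pre-publication check that compares the schema types declared on a page with the types an editorial policy allows for that kind of page. The Python sketch below illustrates the idea. The ALLOWED_TYPES mapping, the page kinds, and the function names are hypothetical editorial choices, not rules published by Google or schema.org, and the check does not replace validating the markup against the visible content.

```python
# Hypothetical editorial policy: which schema types each page kind may declare.
ALLOWED_TYPES = {
    "blog_post": {"BlogPosting", "Article", "BreadcrumbList"},
    "recipe": {"Recipe", "BreadcrumbList"},
}


def declared_types(block):
    """Return the @type values of one JSON-LD block as a list."""
    value = block.get("@type", [])
    return [value] if isinstance(value, str) else list(value)


def find_mismatched_types(page_kind, jsonld_blocks):
    """Return the sorted schema types not allowed for the given page kind."""
    allowed = ALLOWED_TYPES.get(page_kind, set())
    mismatched = set()
    for block in jsonld_blocks:
        mismatched.update(t for t in declared_types(block) if t not in allowed)
    return sorted(mismatched)


# Hypothetical markup found on a simple blog post.
blocks = [
    {"@type": "BlogPosting", "headline": "Release notes"},
    {"@type": "HowTo"},
    {"@type": ["Recipe", "Review"]},
]
flagged = find_mismatched_types("blog_post", blocks)
print(flagged)
```

Any flagged type is a cue to either remove the markup or confirm that the page really contains the corresponding content, such as actual steps for HowTo or real user reviews for Review.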

Neglecting user experience is also a serious failure pattern in the AI mode era. One e-commerce site focused so heavily on AI mode countermeasures that it adopted a strategy that sacrificed the actual user experience.

This site prioritized structures that are easily understood by AI, and as a result its design became difficult for users to read and navigate. For example, definitions and explanations written for AI were repeated redundantly, and users had to scroll a long way to reach the product information they wanted. Page loading speed also dropped sharply because priority was given to implementing structured data.

As emphasized in the mediareach.co.jp article, even in the AI mode era, the final evaluation criterion is “user experience.” Since AI aims to identify and recommend valuable content to users, AI optimization at the expense of user experience will backfire in the long run.

The person responsible for UX at the site said, “I realized that optimization for AI and improving the user experience are not in conflict and should complement each other. Currently, while maintaining a structure that is easily understood by AI, we are shifting to a policy that gives top priority to ease of use and value provision for users.”

The lesson we can learn from this failure is that AI mode countermeasures should only be positioned as part of improving the user experience. AI-optimized content should be both valuable and easy to use for users.

Lack of consistency and a short-term approach are also typical failure patterns on the strategic side. The corporate site of a small business adopted a strategy to respond sensitively to trends and test short-term measures one after another as a countermeasure against AI mode.

The site changed its strategy each time new LLMO-related articles and videos were published, and its approach remained inconsistent. It repeatedly adopted measures that lacked a long-term perspective, focusing on structured data at one point, switching to mass production of content at another, and then concentrating on specific keywords.

As pointed out in the noveltyinc.jp article, AI mode countermeasures are not short-term technical tricks, but rather a long-term process of providing value and building trust. An inconsistent short-term approach would send a signal of confusion to both AI and users.

The site's marketer said, “I've learned that a consistent strategy based on the company's strengths and expertise is important rather than being swayed by immediate trends. Currently, we have formulated a 3-year long-term LLMO strategy and are shifting to a policy of implementing it in stages.”

The lesson we can learn from this mistake is that it is important to view AI mode countermeasures as long-term strategies rather than short-term hacks. Consistent brand messaging, focus on specialized fields, and continuous value delivery will lead to sustainable visibility in the AI mode era.

From these failure cases, the following points emerge as the main pitfalls to be avoided in the AI mode era.

1. Adherence to conventional keyword-centered SEO thinking

2. Mass production with shallow content

3. Misuse of structured data and overimplementation

4. Neglecting the user experience

5. Lack of consistency and a short-term approach

By recognizing and avoiding these pitfalls, you'll be able to build a more effective LLMO strategy.

5-3. Practical LLMO preparation checklist

Based on the analysis of success stories and failure cases so far, we will provide a practical checklist for implementing effective LLMO measures. This checklist summarizes specific action items for improving visibility in the AI mode era. By checking and implementing each item in sequence, it will be possible to proceed with systematic LLMO measures.

Content structure and clarity checkpoints are fundamental elements for making it easier for AI to understand and evaluate content. Let's check the following items.